I spent today, pretty much by accident, at a forum-style discussion of the issues surrounding the Australian government’s proposal to filter the Internet access of all Australian citizens. I say “by accident” because the invitation to attend an “Internet Filtering and Censorship Forum” appeared in my email a couple of weeks ago, and without reading it too carefully, I thought it was going to be an educationally focused discussion about the filtering issues that schools face. That would have been useful and interesting, but I didn’t realise that the discussion would actually be centred on the bigger issue of the Australian government’s proposed Internet filtering scheme. I’m glad I went.

Look, there is no argument from me that we need to keep our children safe online. We absolutely need to protect them from the things that are clearly inappropriate, obscene or undesirable. I remember the first time I realised my son had seen things online that I didn’t think he should see, and it’s a horrible feeling. But this proposal by Senator Stephen Conroy (the Minister for Broadband, Communications and the Digital Economy) is unrealistic, unworkable, naive and just plain stupid.

Let me put you in the picture. In the leadup to the last Australian federal election, the Australian Labor Party (then in opposition) made a series of promises to try and get elected (as they do). One of those promises revolved around a deal done with the powerful Christian Right, in which the Christian Right essentially said “we will give you our preference votes in exchange for you promising to put ISP-level Internet filtering in place”. The Labor party, in a desperate attempt to get elected, said “yes of course we will do that!”. Well, they went on to win the election and now they are in the unenviable position of having to meet an election promise that is just plain stupid. The minister in charge of all things digital, Stephen Conroy, is either the most honorable politician at keeping his promises or the most ignorant, pigheaded, obstinate politician I’ve come across. I suspect a bit of both.

The plan is to legislate for all Australian Internet Service Providers to supply mandatory content filtering for their customers, at the ISP level. This would mean that every Australian ISP would have to maintain whitelists and blacklists of prohibited content, and then filter that content before it gets to their customers. It means that every Australian internet user would have a filtered, censored internet feed, removing any content that the government deems inappropriate. Many comparisons have been made to the filtering that currently takes place in China, where the Chinese government controls what their people see. I don’t think it’s quite that bad (yet), since the Australian proposal is only really talking about blocking content that is actually illegal (child pornography, etc.), but the fact is that filtering is an inexact science, and there is little doubt that there will be many, many webpages that get either overfiltered or underfiltered. Those of us in the education sector who have been dealing with filters for years know exactly how frustrating this can be.

The forum today, which was held at the Sydney offices of web-savvy law firm Baker & McKenzie, raised many important issues surrounding the filtering proposal. There were many experts in the room from organisations such as Electronic Frontiers Australia, the Internet Industry Association, the Law School of the University of NSW, the Brooklyn Law School, the Australian Classification Board, the Inspire Foundation, and many others. Many of these organisations had a chance to make a short presentation about their perspective on the government’s proposal, and there was a chance for some discussion from the larger group. It was a great discussion all round.

This is a big issue. Much bigger than I realised. I’d read a bit about it in the news, but hadn’t given it that much thought. On the surface, a proposal to keep children safe and to block illegal content seems like a reasonable idea. In practice, it is a legal, political and logistical nightmare.

Here are just a few of the contentious issues that the Conroy proposal raises…

What are we actually trying to achieve? What do we really want to block? Stopping kids getting to a few naughty titty pictures is quite a different proposition from preventing all Internet users from accessing pornographic content. Are we trying to just protect children, or are we trying to prevent adults from seeing things that they ought to be able to have the right to choose whether they see or not? The approaches for achieving each of these goals are probably quite different.

Who will make the decisions about what is appropriate or not? There are many inconsistencies in the way the Classification Board rates content. There have been numerous examples where something that is rated as obscene is later reviewed and found to be only moderately offensive. Who decides? Why should a government be allowed to make decisions about what people are allowed to see or not see? In Australia, unlike the US, we do not have a constitution that guarantees a right to free speech, so we cannot even use the argument that our government has no right to control what we see. They can, and they are trying to enforce it.

Won’t somebody think of the children! Sure, filters are designed to keep children safe. We all want that. But what if I’m part of a childless couple? If I have no children in my household, why should I have to be filtered and restricted for content that is aimed at adults? As an adult, I should be able to access whatever content I like, including the titty pictures if that’s what floats my boat. As an adult, I don’t need the government telling me what I can and can’t access online, especially if it has nothing to do with children.

How do you filter non-http traffic? Traffic moves around the internet using all sorts of protocols… ftp, p2p, https, email, usenet, bit torrent, skype, etc. I was told by a reliable source today that there are hundreds of different internet protocols, and many new ones are being created all the time. Filters generally only look at regular http traffic (webpages) and will therefore have little chance of catching content that uses other protocols. Usenet News Groups are a huge source of pornographic material, yet they will be unaffected by the proposed filters. There is nothing to stop child pornographers exchanging content over peer-to-peer networks, bit torrent, skype or even as email attachments… and these would all go undetected by the filters.
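To make that gap concrete, here is a rough Python sketch of a filter that only looks at plain http. Everything in it is made up for illustration: the `BLOCKLIST`, the `bad.example` host and the `filter_decision` function are my own assumptions, not how any real ISP filter is built.

```python
from urllib.parse import urlparse

# Assumption: the filter only understands plain http and keeps a simple host blocklist.
INSPECTED_SCHEMES = {"http"}
BLOCKLIST = {"bad.example"}  # hypothetical host, for illustration only


def filter_decision(url):
    """Return what this toy filter would do with a request for the given URL."""
    parts = urlparse(url)
    if parts.scheme not in INSPECTED_SCHEMES:
        # Anything that is not plain http sails straight past the filter.
        return "not inspected"
    return "blocked" if parts.hostname in BLOCKLIST else "allowed"


requests = [
    "http://bad.example/page",
    "https://bad.example/page",
    "ftp://bad.example/file",
]
decisions = {url: filter_decision(url) for url in requests}

for url, decision in decisions.items():
    print(url, "->", decision)
```

The same host is blocked over http and untouched over everything else, which is the whole problem in miniature.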

Do we bend the trust model until it breaks? Although the http protocol is pretty easy to inspect for its contents, the https protocol is not. The https protocol, otherwise known as Secure http, is the same one used by banks, online merchandisers and so on to facilitate secure online ecommerce transactions. Sending traffic via https instead of regular old http is trivial to do, so one would expect that if the filters eventually happen, then the child pornographers will just start to transmit their stuff using https instead. This will lead to one of two possible situations… either the filters will continue to ignore https traffic (as they do now) and the pornographers carry on with business as usual, making the whole filter thing pointless; or instead, the people who create the filters get smart enough to come up with a way to inspect https traffic as well. As clever as this might be, the whole idea of https traffic is that it is encrypted to the point where the packet contents cannot be seen. Designing filters smart enough to inspect encrypted packets would, if it happened, also break the entire trust model for online ecommerce. If https packets could be inspected for their contents there would be a major breakdown in trust for other transactions such as Internet banking, ecommerce and so on. Would you give your credit card details if you knew that https packets were being inspected by filters?

Computers are not very good at being smart. There is no way that all Internet content can be inspected by human beings. It’s just too big, and growing too fast. There are about 5000 photos a minute being added to Flickr. About 60,000 videos a day being added to YouTube. There are thousands of new blogs being started every month. Content is growing faster than Moore’s Law, and there is no way that content can be inspected and classified by humans at a rate fast enough to keep up with the growth. So we turn to computers to do the analysis for us. Using techniques like heuristic analysis, computers try to make intelligent decisions about what constitutes inappropriate content. They scan text for inappropriate phrases. They inspect images for a certain percentage of pixels that match skin tones. They try to filter out pictures of nudity, but in the process they block you from seeing pictures of your own kids at the beach. Computers are stupid.
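Here is a rough sketch of the “percentage of skin-tone pixels” idea, just to show how easily it goes wrong. The RGB rule in `looks_like_skin`, the 40% threshold and the two fake “photos” are all assumptions I have picked for illustration; I am not claiming any real filter uses these exact numbers.

```python
def looks_like_skin(r, g, b):
    """Crude rule-of-thumb RGB test for a skin-like colour (an assumed rule, not a real product's)."""
    return (
        r > 95 and g > 40 and b > 20
        and r > g and r > b
        and abs(r - g) > 15
        and max(r, g, b) - min(r, g, b) > 15
    )


def skin_fraction(pixels):
    """Fraction of pixels, given as (r, g, b) tuples, that pass the skin test."""
    if not pixels:
        return 0.0
    return sum(1 for p in pixels if looks_like_skin(*p)) / len(pixels)


def would_block(pixels, threshold=0.4):
    """Block the image if too many of its pixels look like skin (threshold is an assumption)."""
    return skin_fraction(pixels) >= threshold


# Two made-up 100-pixel "photos": kids at the beach (lots of skin, some sea)
# and a landscape (grass and sky).
beach_photo = [(224, 172, 105)] * 60 + [(70, 130, 180)] * 40
landscape = [(34, 139, 34)] * 70 + [(135, 206, 235)] * 30

print("beach photo blocked:", would_block(beach_photo))
print("landscape blocked:", would_block(landscape))
```

The rule has no idea what the picture is of. It only counts colours, so the family beach photo gets treated exactly like the content the filter was meant to catch.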

The Internet is a moving target. The Internet is still growing much too fast to keep up with it. There are new protocols being invented all the time. Content is dynamic. Things change. If I have a website that is whitelisted as being “safe” and ok, what’s to stop me from replacing the content with images that are inappropriate? If only the URL is being assessed (and not the content) then that makes the assumption that the content will not change after the URL is approved. A website could easily have its content replaced after its URL is deemed to be safe.
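A small sketch of that bait-and-switch, under some loud assumptions: `fake_web` is a pretend Internet held in a dictionary, and the URL, the page contents and both check functions are invented for this example.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """SHA-256 fingerprint of a page's content."""
    return hashlib.sha256(content).hexdigest()


# A pretend Internet: one URL and whatever it currently serves (hypothetical data).
fake_web = {"http://example.com/": b"holiday snaps"}

# At approval time the reviewer whitelists the URL and, optionally, records a fingerprint.
approved_urls = {"http://example.com/"}
approved_fingerprints = {url: fingerprint(fake_web[url]) for url in approved_urls}


def allowed_by_url(url):
    """URL-only whitelist: never looks at the content again."""
    return url in approved_urls


def allowed_by_content(url):
    """Content-aware check: the page must still match what was approved."""
    return approved_fingerprints.get(url) == fingerprint(fake_web[url])


# After approval, the site owner quietly swaps the content.
fake_web["http://example.com/"] = b"something else entirely"

print("URL check still allows it:", allowed_by_url("http://example.com/"))
print("content check still allows it:", allowed_by_content("http://example.com/"))
```

The URL-only whitelist happily keeps approving the page. The content check does notice the swap, but it would also trip on every harmless edit, so somebody would have to re-review every page every time it changed, which brings us straight back to the scale problem.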

The technical issues are enormous. The internet was designed originally to be a network without a single point of failure. When the US military built the forerunner of the Internet back in the late 60s, its approach was to build a network that could route around any potential breakdowns or blockages. Yet when the filtering proposal is mapped out, the Internet is seen as a nice linear diagram that flows nicely from left to right, with the Cloud on one side, the end user on the other and the ISP in the middle. The assumption is that if you simply place a filter at the ISP then all network traffic will be filtered through it. Wrong! The network of even a modest-sized ISP is extremely complex, with many nodes and pathways. In a complex network, where do you put the filter? If there is a pathway around the filter (as there almost certainly will be in a network designed to not have a single point of failure) then how many filters do you need to put in? It could be hundreds! The technical issues facing the filtering proposal are enormous, and filtering effectively is probably an insurmountable task.
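To picture the “where do you put the filter?” problem, here is a toy model of an ISP with just two internal paths. The topology and node names (`edge1`, `core1`, `upstream` and so on) are entirely hypothetical, and a real ISP network is vastly bigger; the sketch simply asks whether traffic can get from the user to the outside world without touching a filtered node.

```python
from collections import deque


def reachable_avoiding(graph, start, goal, filtered):
    """Breadth-first search: can traffic get from start to goal without crossing a filtered node?"""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, ()):
            if nxt not in seen and nxt not in filtered:
                seen.add(nxt)
                queue.append(nxt)
    return False


# A made-up ISP with two redundant paths from the user to the upstream link.
isp = {
    "user": ["edge1", "edge2"],
    "edge1": ["core1"],
    "edge2": ["core2"],
    "core1": ["upstream"],
    "core2": ["upstream"],
    "upstream": [],
}

bypass_with_one_filter = reachable_avoiding(isp, "user", "upstream", {"core1"})
bypass_with_two_filters = reachable_avoiding(isp, "user", "upstream", {"core1", "core2"})

print("one filter can be bypassed:", bypass_with_one_filter)
print("two filters can be bypassed:", bypass_with_two_filters)
```

Even in this tiny network one filter is not enough, because the redundancy that keeps the network running also gives the traffic a way around. Every extra redundant path means another filter.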

Filters don’t work. The last time the government issued an “approved” filter (at the user end) it was cracked by a 13 year old kid in minutes. We were told the inside story of this today and some say that this was an unfair claim since the kid was given instructions by someone online, but the point remains that the filter was easily cracked. Over 90% of all home computers run in administrator mode by default, so cracking a local filter is just not that hard. Schools that filter will tell you that students who really want to get around the filters do so. They use offshore proxies and other techniques, but filters rarely stop someone who really wants to get past them. All they do is hurt the honest people, not stop the bad ones.

Australia, wake up! Conroy’s plan is a joke. It’s an insult. It’s nothing but political maneuvering to save face and look like the government is doing something to address a problem that can’t be effectively addressed. Conroy is doing all this to keep the Christian right happy in exchange for votes. He won’t listen to reason, and he won’t engage in discussion about it. He is taking a black and white view of a situation that contains many shades of grey. The problem of keeping our kids safe online is important and needs to be addressed, but not like this. Please take the time to write to him and tell him what you think. Don’t use email, it counts for nothing (even if he is the Minister for Broadband, Communications and the Digital Economy!) Write to him the old fashioned way… it’s the only format that politicians take any notice of.

The irony of the underlying politics and the involvement of the Christian Right is the disgraceful history of child abuse by the Church… Catholic, Anglican, you name it. There is case after case after case of children being abused and taken advantage of by priests and other religious clergy. If Senator Conroy is serious about “evidence based research” and wants to legislate against the most likely places that children get molested and abused, maybe he should be doing something about putting “filters” on the Catholic Church. Or what about banning the contact of small children with older family members… because statistically that’s where most child molestation takes place. Stupid idea? Of course it is, but it makes more sense than trying to impose a mandatory “clean feed” of Internet access for all Australians.

It’s a complete joke and a bloody disgrace.

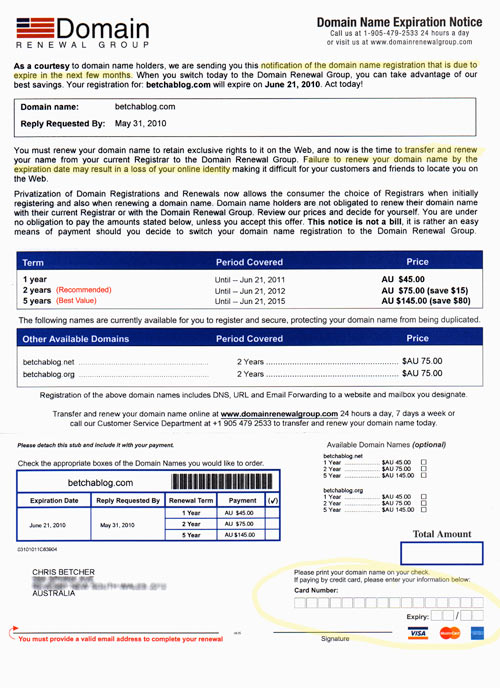

If there’s one thing I hate it’s when people assume I’m an idiot and try to rip me off.

I’m a huge believer in the notion of trust and respect as the primary drivers in the relationship between student and teacher. People have occasionally told me that I’m just incredibly naive about this, but all I can talk from is my own experience, and in my own experience, building relationships of trust, respect and genuine care between student and teacher is the foundation upon which all “policy” rests on in my classroom. I realise that school administrators will feel a need for something a little more concrete than this, but any policies, AUPs or guidelines that aren’t based on this first rule are simply not sustainable in my view.
